CBC’s Fifth Estate has put together a ranking of Canadian hospitals and the results are out. They provide a new online tool that grades hospitals on key performance indicators reported by hospitals, and they justify it as a call for more accountability and transparency in the Canadian health care system. Given the inevitable complexity of ranking hospitals with differences in provincial health status and population characteristics, the fact that they were able to rank only 239 of the 600-plus hospitals, and the reluctance of most provinces and territories to provide some of the requested information, it is a limited picture. Yet it is information that will be used.

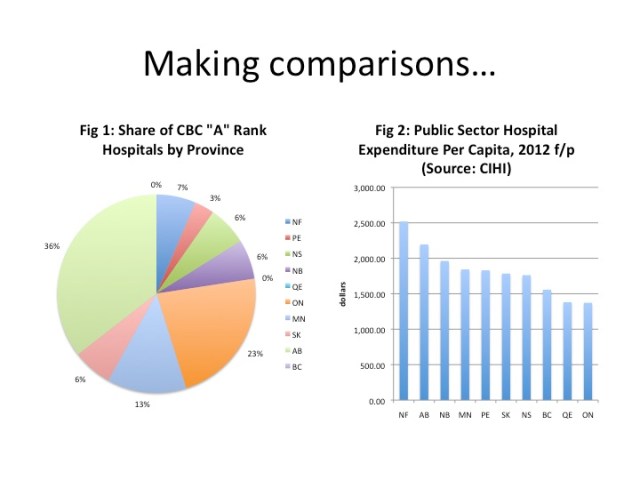

Here is one way it can be used. There are 31 hospitals that were ranked with A or A+ status. How are these top hospitals distributed across the provinces? Figure 1 shows that Alberta had 36 percent of these “A rank” hospitals, the largest share, followed by Ontario at 23 percent, then Manitoba at 13 percent. British Columbia and Quebec have none of their hospitals in the A rank category. From what I can tell, none of Quebec’s hospitals was given a grade; they were left unrated because they had insufficient information reported. So, this is really not a complete ranking of Canadian hospitals.

Despite this limitation, how does the share of “A ranks” compare to per capita public sector hospital spending for 2012, as forecast by the Canadian Institute for Health Information? Figure 2 shows that Newfoundland, Alberta and New Brunswick spend the most public dollars per capita on hospitals, while British Columbia, Quebec and Ontario spend the least. Newfoundland, Alberta and New Brunswick hold 7%, 36% and 6% of the “A rank” hospitals respectively, in each case more than their share of the national population (1.5%, 10.9% and 2.2% respectively). British Columbia and Ontario hold 0% and 23% of the “A ranks” respectively, against population shares of 13.1% and 38.4%.
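To make the comparison concrete, here is a minimal Python sketch that divides each province's share of “A rank” hospitals by its share of the national population, using only the percentages quoted above. The name `representation_ratio` is my own label for illustration, not something from the CBC or CIHI material, and the ratio is a rough descriptive device rather than a performance measure.

```python
# Shares in percent, copied from the figures quoted in the post.
a_rank_share = {
    "Newfoundland": 7.0,
    "Alberta": 36.0,
    "New Brunswick": 6.0,
    "British Columbia": 0.0,
    "Ontario": 23.0,
}
population_share = {
    "Newfoundland": 1.5,
    "Alberta": 10.9,
    "New Brunswick": 2.2,
    "British Columbia": 13.1,
    "Ontario": 38.4,
}


def representation_ratio(a_share, pop_share):
    """Hypothetical helper: a value above 1 means a province holds more
    of the A-rank hospitals than its population share alone would suggest."""
    return a_share / pop_share


ratios = {
    province: representation_ratio(a_rank_share[province], population_share[province])
    for province in a_rank_share
}

# Print provinces from most to least over-represented.
for province, ratio in sorted(ratios.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{province:17s} {ratio:5.2f}")
```

The three high-spending provinces come out well above 1 (roughly 4.7, 3.3 and 2.7), while Ontario sits near 0.6 and British Columbia at 0. With only 31 hospitals in the A category, these ratios are very sensitive to one or two hospitals moving in or out.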

Inevitably, this type of simplistic comparison can be used to suggest that spending more on health care, and particularly on hospital care, leads to better performance outcomes. That may or may not be true in the case of hospital performance. Unfortunately, the hospital ranking data are not complete enough to allow us to draw those types of conclusions. It’s a start, but much more needs to be done, not least of which is acquiring a more complete set of data.


I could quibble endlessly about this report (the sample size, data problems, and the fact there are a few real jaw-droppers in the A-B+ range that should be way lower), but let’s consider some more interesting issues with the indicators they used from CIHI.

1. 30-day readmission. This is a common quality metric (incurring a significant financial penalty in the US), and in the case of CIHI (unlike CMS in the US), they restricted this to patients in obstetrics, adult surgical, and adult medical, and patients 19 and under (I think that makes sense). The idea behind the 30-day measure is that patients getting proper care should be extremely unlikely to come back that soon. But if you look at the technical note on the measure, they did not (likely because the data wasn’t there) limit the readmission counts to either people with the same condition (i.e., the last treatment didn’t work) or those with a related condition (e.g., postsurgical infection). More interesting is that there is recent discussion in the US about the need to adjust readmission data for mortality. If you die, you won’t be readmitted. If 100% of your patients die after discharge, you look good. (See this AHA publication for more detail.)

2. 30-day mortality after hospitalization for AMI. This is the rate of people who die within 30 days of being admitted for a serious heart attack. As CIHI notes (but CBC does not), this includes mortality for any reason. Since AMI patients are generally older and have many lifestyle and underlying health factors, the potential causes of death are wider than they would be for, say, someone admitted for physical trauma from a workplace injury. More interesting is the research on low rates of medication compliance for AMI patients, which cannot be captured in the current system.

3. Administrative costs: this is a soft target and the CIHI (but again not CBC) site notes the difficulty in measuring this. What is interesting is that in most hospitals, clinical education services (updating staff on treatment improvements, teaching them to use new equipment, hand-washing campaigns) are coded as administrative. This is money well worth spending, but what sticks is the fat-cat story.

4. Changes in cost per weighted case: I read this several times and did not quite get it. But what the measure does not appear to include is the clinical outcome. Of course, we can all measure the cost of a drug, a bed, a consult, or an hour of physio. But what really matters is the cost of cure. There are very few systems in place where that is tracked.

I won’t go through them all, but my point is that the metrics (in addition to the standard complaint about measurement problems) really are about significant stories that go beyond the hospitals, such that the quality rankings raise non-hospital issues. The readmission case raises a story about primary care access and patient education. The AMI mortality measure has a much bigger story about the surprisingly low rate of treatment adherence among people with serious illness. The other two measures are about our difficulty in tying financial measures to our most critical outcomes: the elimination or amelioration of disease.

Hi Shangwen:

I was hoping you would provide some comments on the report’s methodology. Appreciate the expertise.

I would strongly recommend:

a) to put 3 sigma error bars on all these comparisons

I have so often seen people interpret results that seem significant but are just noise, including a 40,000-participant Gallup study for a clothing retail chain. Some people have a real talent for “sharpening” results.

I remember the same thing happening with a study of operation problems in German hospitals. I think I could find both cases again.

b) to be very careful about attaching any incentives or disincentives to outcomes that are not absolutely clearly attributable
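As a rough illustration of point a), here is a small Python sketch that puts 3 sigma bars on the provincial shares of “A rank” hospitals quoted in the post. It assumes each share can be treated as a simple binomial proportion out of the 31 A-rank hospitals and uses the normal approximation, which is crude at this sample size. The function name `three_sigma_interval` is made up for this example.

```python
import math

N_A_RANK = 31  # total hospitals ranked A or A+, as stated in the post


def three_sigma_interval(share, n, k=3.0):
    """Normal-approximation interval for a proportion, clipped to [0, 1].

    Assumption: the share behaves like a binomial proportion with n trials.
    """
    se = math.sqrt(share * (1.0 - share) / n)
    return max(0.0, share - k * se), min(1.0, share + k * se)


# Shares of the A-rank hospitals quoted in the post.
shares = {"Alberta": 0.36, "Ontario": 0.23, "Manitoba": 0.13}

intervals = {
    province: three_sigma_interval(share, N_A_RANK)
    for province, share in shares.items()
}

for province, (low, high) in intervals.items():
    print(f"{province:9s} {shares[province]:.2f}  [{low:.2f}, {high:.2f}]")
```

Under these assumptions Alberta's interval runs from about 0.10 to 0.62 and overlaps heavily with Ontario's and Manitoba's, which is the kind of noise I mean.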

Wouldn’t it be better to count A rated beds per province? Maybe Newfoundland has smaller hospitals.

I was led to question the report’s accuracy because the only hospital I looked up, the one that I use, had incorrect information listed. Misericordia Community Hospital in Edmonton is listed as having “No Emergency Department.” I can assure you that they do have an Emergency Department, since I have used it many times. While it’s only one data point, it was one of the few that I could verify, and its falsehood puts the whole report into question in my mind.
