"He's a smart guy, almost as smart as he thinks he is…" Review on ratemyprofessors.com

The ratemyprofessors.com website comes in for a lot of criticism. Some allege that the reviews are bogus. Others argue that it provides no useful information for students, just laurels for hot, easy teachers. Another common criticism is that too few students post reviews for the site to be informative. Moreover, those who do post tend to be either exceptionally happy or excessively disgruntled.

While these criticisms have merit, official teaching evaluations are also flawed. Official evaluations are likewise drawn from a selective sub-sample of students: those who drop, withdraw or simply don't bother to attend lecture often do not complete the evaluation form. Professors who grade generously score higher on official evaluations, too.

Ratemyprofessors.com has one big advantage over official evaluations: it is publicly accessible. Students use it because, often, there is no alternative source of information. Yet how reliable are the reviews on ratemyprofessors?

The University of Toronto student union publishes all undergraduate professors' teaching evaluations in its Anti-Calendar. I took a small sample of 22 economics professors and matched their ratings on ratemyprofessors with their scores on the university's official teaching evaluations.

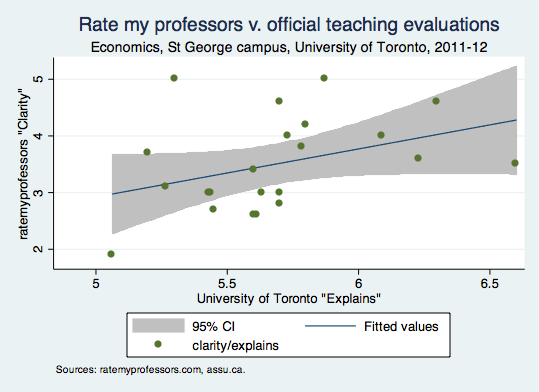

The immediate challenge I faced is that the official evaluations and ratemyprofessors measure quite different things. The closest match was between the ratemyprofessors category "clarity" (1=confusing, 5=crystal clear) and the University of Toronto category "explains" ("Explains concepts clearly and with appropriate use of examples"; 1=very poor, 7=outstanding). Even in this small sample, the relationship between the two sets of scores was statistically significant at the 10% level (p=0.08).

The correlation between the two is not perfect. However, what is clear from reading the Anti-Calendar is that a professor's evaluations vary a great deal from course to course, and sometimes even between sections of the same course (in doing these calculations, I took a weighted average of evaluations across all undergraduate courses taught). Overall, I would say that the ratemyprofessors "clarity" score gives some information about a professor's official "explains" score.
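A minimal Python sketch of that kind of weighted average follows. Weighting each course by its number of completed evaluation forms is an assumption made here for illustration (the weights actually used are not specified), and the scores and counts are made up.

```python
# Hypothetical sketch: combine one professor's per-course "explains" scores
# into a single number. Weighting by completed forms is an assumption.

def weighted_average(scores, weights):
    """Return sum(w * s) / sum(w) for paired scores and weights."""
    if len(scores) != len(weights):
        raise ValueError("scores and weights must have the same length")
    total_weight = sum(weights)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(s * w for s, w in zip(scores, weights)) / total_weight

# Made-up numbers: three courses taught by one professor.
course_scores = [5.2, 6.1, 5.8]   # "explains" score on the 1-7 scale
forms_returned = [120, 35, 60]    # hypothetical weights
print(round(weighted_average(course_scores, forms_returned), 3))
```

A large course with many forms pulls the average toward its score, which is why the result sits closer to 5.2 than a simple mean would.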

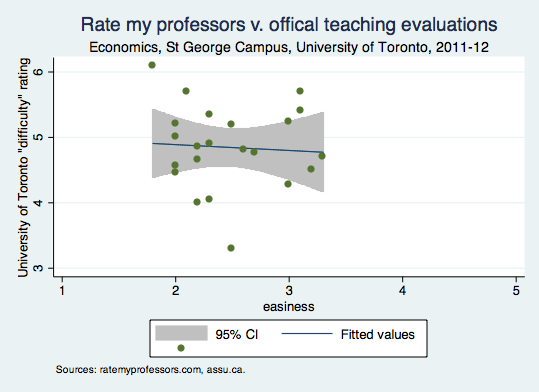

Another pair of concepts that one might expect to be correlated are the ratemyprofessors "easiness" score (1=hard, 5=easy) and the University of Toronto "difficulty" score ("Compared to other courses at the same level, the level of difficulty of the material is… 1=very low 7=very high"). The correlation between the two, however, is weak (r=-0.06), and neither significantly predicts the other.
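To see how far r=-0.06 is from significance in a sample this size, here is a quick Python check using the standard t-test for a correlation coefficient, t = r*sqrt(n-2)/sqrt(1-r^2). It assumes all 22 professors contribute to the correlation, and the critical value 2.086 (two-sided 5%, 20 degrees of freedom) is taken from standard t tables rather than computed.

```python
import math

def correlation_t_stat(r, n):
    """t statistic for H0: rho = 0, with n - 2 degrees of freedom."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r * r)

# r = -0.06 from the post; n = 22 assumes every sampled professor is included.
t = correlation_t_stat(-0.06, 22)
T_CRIT_20_DF = 2.086  # two-sided 5% critical value, 20 df (from tables)
print(round(t, 3), abs(t) > T_CRIT_20_DF)
```

The statistic is a small fraction of the critical value, so a sample of 22 cannot distinguish this correlation from zero.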

I suspect that selection may be an issue here. The students who fill out the official form are the ones who found the course easy enough to stick with, whereas the ratemyprofessors contributors include students who dropped. This could explain why the two measures differ, but it raises the question: which one is more accurate?

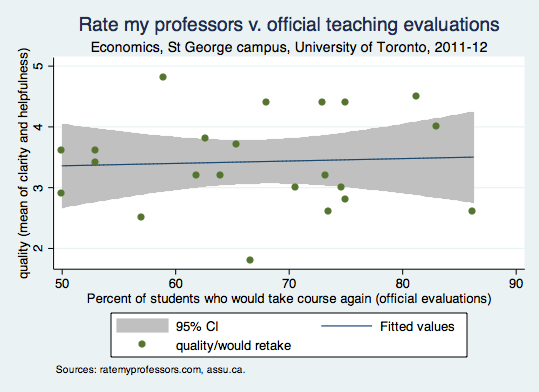

Ratemyprofessors has a third dimension along which it evaluates professors: helpfulness. Unfortunately, the University of Toronto teaching evaluation form has no category that is an obvious match for helpfulness, such as availability for office hours or willingness to answer questions. As an alternative, I decided to compare the ratemyprofessors summary measure "quality" (an average of clarity and helpfulness) with one of the summary measures on the University of Toronto form. That form has a couple of summary measures; I used "Retake", which asks: "Considering your experience with this course, and disregarding your need for it to meet program or degree requirements, would you still have taken this course?"

There is not much of a relationship between the ratemyprofessors "quality" score and the University of Toronto "retake" score. It could be that the ratemyprofessors scores are flawed, or it could be that these are not comparable measures of teaching effectiveness. I strongly suspect that the retake question is confusing and hard for students to answer.

Thomas Timmerman's much larger study of ratemyprofessors finds that "the summary evaluation correlates highly with summary evaluations from an official university evaluation." Brown, Baillie and Fraser also find a strong correlation (r=0.5) between official measures of clarity and helpfulness and ratemyprofessors scores, although interestingly they find, as I do, a weaker correlation between the official and the ratemyprofessors ease/difficulty scores.

Ratemyprofessors isn't perfect, but if it's the only publicly available source of information about the quality of instruction, people will use it. The only way to diminish its influence is to provide an alternative.

I basically agree with this (although at the U of C student evals are available to students, and Ratemyprofessors doesn’t seem to be used much), and I also basically agree that student evaluations are a good measure of professorial quality. Having said that, I don’t think they are a perfect or wholly satisfactory measure. When I began teaching, I know that I taught my students a few things that were, well, wrong. But my students didn’t know that; they just liked that the wrong information was humorously and confidently delivered. I also think it is possible to game the evaluation process: e.g., 100% finals are good for course evaluations, since the students don’t have any reason to feel cross with the professor yet. So I guess the question I would ask is: even if Ratemyprofessors is as good a measure of professors as traditional evaluations, how good a measure is it overall, and are there any other measures that might be better? I am helpfully asking this question because I don’t have any answer to it.

Alice – “are there any other measures that might be better”

In US elementary and secondary schools there’s a lot of testing that’s designed to measure what students have actually learnt – either testing the students at the end of the year, or testing the students at the beginning of the year and at the end and measuring the change in performance.

When that’s been done at the post-secondary level, the results are generally not very encouraging, but then the counter-argument that gets made is “the standard tests are not measuring the critical thinking skills/latest developments in economic theory/whatever that students are learning in my class.”

In some sense, before we can even start thinking about how to evaluate how well professors are doing their job, we have to work out what that job is; in other words, what a good professor should be doing/accomplishing/achieving. That is not easy to do.

Frances, I’m just amazed that as a professor of economics with teaching, publishing, administrative, and I think, family obligations, you had time to do this analysis.

A number of years ago I was a grad student rep on an academic hiring committee – an experience I wouldn’t recommend to anyone who doesn’t wish to make powerful enemies. I looked all the candidates up on ratemyprofessors.com, alongside the teaching evaluations they had submitted. It was pretty useful if you know what to look for and don’t take it too seriously. In particular, if students complained about high workload, difficult tests and marking practices – comments they did not intend to be complimentary – I generally took it to mean this is probably a good professor.

Matthew – “if students complained about high workload…I generally took it to mean this is probably a good professor.”

One thing about the U of T scoring system that’s kind of funny/revealing is that “7” is usually the “best” outcome, i.e. outstanding levels of explaining, communication, teaching effectiveness. For workload and difficulty, though, “7” means “very high.” I have to wonder whether this reflects a feeling on the part of whoever designed the survey that high workload and high difficulty are good outcomes!

“you had time to do this analysis”

I did have some help with the data gathering – plus this is part of my on-going project on academic salaries. This is partly written as a response to people who might say “but you can’t learn anything useful from ratemyprofessors.”

Sorry for a comment that is 95% off-topic. But I really like those shaded areas for the 95% CI. Really intuitive.

1. They are new to me. Is that just me?

2. What do you call them? Bow-ties? (Like candlesticks, only 2D instead of 1D.)

3. Why don’t they extend in both directions East-West? Is it just to keep them on the page?

4. If I drew a straight line, that asymptoted to the North-West curve, would that same line also asymptote to the South-East curve?

Nick – I think they’re probably more of a micro thing. I don’t know exactly what they’re called. This is how I generated the graphs in Stata:

```stata
* twoway plot types take the y variable first, then the x variable
twoway (lfitci VariableY VariableX) (scatter VariableY VariableX)
```

The linear fit line with the confidence intervals is generated by the “lfitci” command, and the scatter diagram is generated by the “scatter” command.

I think they’re just called confidence intervals – though bowtie is a good name.

This is how I think you should think about them: at the mid-point of the linear-fit line, the shaded area represents the confidence interval for the constant (intercept) term – I think, but it could be the confidence interval of the mean, and I can’t find a good explanation online of how these are generated.

But there’s also a confidence interval for the slope of the line as well. If you can imagine taking a vertical band at the mid-point of the line, and then drawing through it all the possible lines that have slopes = the estimated coefficient on x plus or minus whatever the margin of error is, then you’d get the bowtie.

You may or may not understand this, Nick – one thing I’m very confident of, though, is that absolutely no one else reading this will get what I’m getting at! Hopefully someone else will take pity on us and explain coherently.
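The bowtie described above matches the usual formula for a pointwise confidence interval around a fitted OLS line, assuming that is what lfitci draws by default (a later comment says it reflects the precision of the parameter estimates). Its half-width at x is t*s*sqrt(1/n + (x - x_bar)^2/Sxx), where s is the residual standard error and Sxx is the sum of squared deviations of x from its mean. Because of the (x - x_bar)^2 term, the band is narrowest at the mean of x and its edges are hyperbolas, not straight lines. Each pair of opposite branches (north-west with south-east, north-east with south-west) shares one straight-line asymptote through (x_bar, y_bar), with slope b - t*se(slope) or b + t*se(slope). All inputs in this Python sketch are hypothetical.

```python
import math

def ci_halfwidth(x, x_bar, s, n, sxx, t_crit):
    """Half-width of the pointwise CI for the fitted mean at x in simple OLS:
    t_crit * s * sqrt(1/n + (x - x_bar)^2 / sxx)."""
    return t_crit * s * math.sqrt(1.0 / n + (x - x_bar) ** 2 / sxx)

# Hypothetical inputs, chosen only to show the shape of the band.
X_BAR, S, N, SXX, T = 5.6, 0.8, 22, 6.0, 2.086

# Narrowest at x_bar and widening symmetrically on both sides: the "bowtie".
for x in [4.6, 5.1, 5.6, 6.1, 6.6]:
    print(x, round(ci_halfwidth(x, X_BAR, S, N, SXX, T), 3))

# Far from x_bar the half-width grows by about t * s / sqrt(sxx) = t * se(slope)
# per unit of x, which is the gap between the slope of the fitted line and
# the slopes of the band's straight-line asymptotes.
far_growth = (ci_halfwidth(1000.0, X_BAR, S, N, SXX, T)
              - ci_halfwidth(999.0, X_BAR, S, N, SXX, T))
print(round(far_growth, 4), round(T * S / math.sqrt(SXX), 4))
```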

@Nick. Those 95% CIs are confusing me. I’ll admit my statistics is subpar, so please feel free to correct me if I’m wrong.

In the top diagram, it appears that more than 50% of observations fall outside the 95% CI. I would have expected that roughly 95% of the observations should fall within the 95% CI.

I may have had a comment eaten by the system – could you check Frances?

I have noticed an interesting pattern in my own (TA) teaching evaluations. I often teach the same class on Monday, Wednesday and Friday, and I tend to get lower evaluation scores for the Wednesday class. Aggregated over the three courses I have taught with this structure, the Wednesday scores are significantly lower than the Monday and Friday scores (which are statistically the same).

I have no idea whether I am more grumpy on Wednesdays or it is a selection effect on the students. Students are able to choose which class they attend, so it's possible Wednesday students are different from the others.

Evan, here’s the regression output for the line shown on the first picture.

| clarity | Coef. | Std. Err. | t | P>\|t\| | 95% CI (lower) | 95% CI (upper) |
|---|---|---|---|---|---|---|
| ExplainsvClarity | .848473 | .464486 | 1.83 | 0.083 | -.1204279 | 1.817374 |
| _cons | -1.320147 | 2.64657 | -0.50 | 0.623 | -6.840796 | 4.200501 |
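As a check on that output, the t statistic, p-value and confidence interval for the slope can be recomputed from the coefficient and standard error alone. This Python sketch assumes 20 residual degrees of freedom (22 professors minus two estimated parameters), integrates the Student t density numerically with Simpson's rule rather than calling a statistics library, and takes the critical value 2.086 from standard t tables, so the interval matches the Stata output only to rounding.

```python
import math

def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    c = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return c * (1 + x * x / df) ** (-(df + 1) / 2)

def two_sided_p(t, df, steps=2000):
    """Two-sided p-value: integrate the t density over [0, |t|] (Simpson's rule)."""
    a, b = 0.0, abs(t)
    h = (b - a) / steps  # steps must be even for Simpson's rule
    area = t_pdf(a, df) + t_pdf(b, df)
    for i in range(1, steps):
        area += (4 if i % 2 else 2) * t_pdf(a + i * h, df)
    area *= h / 3
    return 2 * (0.5 - area)

# Slope row from the regression output; df = 22 - 2 = 20 is an assumption.
coef, se, df = 0.848473, 0.464486, 20
t = coef / se
T_CRIT = 2.086  # 97.5th percentile of t with 20 df, from tables
print(round(t, 2), round(two_sided_p(t, df), 3))
print(round(coef - T_CRIT * se, 3), round(coef + T_CRIT * se, 3))
```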

Evan, I think this is it but am not 100% sure: the mean of x in the first equation is 5.6, and the standard error of the mean is .077. The slope is 0.8, i.e. y=0.8x + constant, and the slope has a standard error of .46. The shaded area just shows all the possible lines that fit within the confidence intervals defined by those estimates/standard errors. Alternatively, it might be that the standard error of the constant term and the standard error of the slope together generate this confidence interval. I’m going to have to dig through my many Stata texts!

In general it won’t be the case that 95% of the points will fall within a 95% confidence interval. Think, e.g., of a regression done with census data – the confidence interval on a linear fit line will be tiny because the sample size is so huge, but there’s lots and lots of heterogeneity that’s associated with other things e.g. age, education, whatever, that won’t be explained by a simple linear regression.
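The census point can be made concrete: near the mean of x, the half-width of the fitted line's 95% interval is roughly 1.96*s/sqrt(n), where s is the residual standard deviation, while individual observations keep scattering by roughly plus or minus 1.96*s however large n gets. This Python sketch uses a hypothetical s of 10 and the normal approximation; for small samples the t multiplier would be somewhat larger.

```python
import math

def mean_ci_halfwidth(s, n, z=1.96):
    """Approximate 95% half-width of the fitted line at the mean of x: z * s / sqrt(n)."""
    return z * s / math.sqrt(n)

RESIDUAL_SD = 10.0  # hypothetical spread of outcomes around the line
for n in [22, 10_000, 1_000_000]:
    band = mean_ci_halfwidth(RESIDUAL_SD, n)
    # Roughly 95% of individual points lie within about 1.96 * s of the line,
    # no matter how large n is, so the band covers fewer points as n grows.
    print(n, round(band, 3), round(1.96 * RESIDUAL_SD, 1))
```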

Evan, I suspect that one reason your Wednesday class may have lower evaluations than Mondays or Fridays is that more students attend. If I teach a principles course with a class late on a Friday afternoon, I find that is the best time to administer evaluations. I think that is because only the more serious students sign up for a section with classes late on a Friday afternoon. Moreover, even if others show up, the less serious ones are less likely to be in class on a Friday afternoon.

Alice, there have been several alternatives proposed. An interesting one is contained in the following paper: http://www.tandfonline.com/toc/vece20/40/3#.UZ6GI6Pn_cs Basically, it involves using student grades in subsequent courses as a measure of learning outcomes. For example, controlling for other factors, students who learnt more with instructor A in principles of micro should do better in intermediate micro. There are a couple of practical problems with this approach. One is that it could only be used in courses that are prerequisites for other courses. Another is that at smaller institutions, you would have to wait a number of years for enough students to go on to subsequent courses to get statistically significant results. Interestingly, the paper finds that its measure of learning outcomes is unrelated to teaching evaluation scores.
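Here is a hypothetical Python sketch of the idea behind the subsequent-course approach, not the paper's actual method: regress a later-course grade on an indicator for having had instructor A in the prerequisite, controlling for prior GPA, using simulated data in which stronger students are more likely to choose instructor A. The variable names, the single control and all the data are made up. The point is that a raw comparison of averages overstates the instructor effect, while controlling for prior GPA (here via the Frisch-Waugh-Lovell theorem) recovers it.

```python
import random

def ols_slope(x, y):
    """Slope from a simple regression of y on x (with an intercept)."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx

def residualize(x, y):
    """Residuals from regressing y on x (with an intercept)."""
    b = ols_slope(x, y)
    mx, my = sum(x) / len(x), sum(y) / len(y)
    return [yi - my - b * (xi - mx) for xi, yi in zip(x, y)]

def instructor_effect(prior_gpa, had_a, later_grade):
    """Coefficient on the instructor-A dummy in later_grade ~ 1 + prior_gpa + had_a,
    computed by partialling prior_gpa out of both the dummy and the grade."""
    return ols_slope(residualize(prior_gpa, had_a), residualize(prior_gpa, later_grade))

def simulate(n=5000, true_effect=3.0, seed=1):
    """Hypothetical data: stronger students are more likely to pick instructor A."""
    rng = random.Random(seed)
    gpa, had_a, grade = [], [], []
    for _ in range(n):
        g = rng.uniform(2.0, 4.0)
        a = 1.0 if rng.random() < 0.2 + 0.3 * (g - 2.0) else 0.0
        gpa.append(g)
        had_a.append(a)
        grade.append(50 + 8 * g + true_effect * a + rng.gauss(0, 5))
    return gpa, had_a, grade

gpa, had_a, grade = simulate()
n_a = sum(had_a)
naive = (sum(y for y, a in zip(grade, had_a) if a) / n_a
         - sum(y for y, a in zip(grade, had_a) if not a) / (len(had_a) - n_a))
print(round(naive, 2), round(instructor_effect(gpa, had_a, grade), 2))
```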

Another approach is to have a colleague come in to evaluate your teaching. Of course, one problem with this approach is that people are likely to ask a friend to be the evaluator.

@Frances: Yes, that makes sense. The CI is a CI for the parameter estimates and not the underlying data. It’s been far too long since I last did any econometrics (obviously).

@Derek. I suspect you are correct about Fridays being different (the students who actually turn up on Fridays are more engaged). I’m not as sure that the same explanation can account for the difference between Mondays and Wednesdays though.

In either case, I think there is a strong case to be made for teaching evaluations being adjusted to account for the day/time of the class.

Evan – “I think there is a strong case to be made for teaching evaluations being adjusted to account for the day/time of the class.”

But there’s still scope for manipulation here, e.g. when a class runs from 8:30 to 11:30, it matters whether the evaluations are handed out at 8:30 or 11:20.

Or, to take another example: At Carleton we can do evaluations any time in the last two weeks of classes. I always do the evaluations as early as possible, that is, in the second-to-last week, so that the students who come to the last class just to find out what’s on the final exam don’t fill out the evaluations. I’m quite open with my larger undergrad classes about this policy, so if anyone actually cares enough to fill out the evaluation they can show up in the second-to-last week.

I mention this only to say that, even though these things matter, it’s hard to adjust for them.

By default, Stata’s -lfitci- computes confidence intervals that reflect the precision of the parameter estimates of the regression model. To get confidence intervals for an individual prediction accounting for the residual variance, simply add option “stdf”.
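To illustrate the difference, and why so many points can fall outside the shaded area, here is a minimal Python simulation (not Stata). The data are synthetic, and 1.96 is a large-sample stand-in for the t multiplier. It counts how many observed points fall inside a pointwise 95% band for the fitted mean versus a band that also includes the residual variance, which is the idea behind the stdf option.

```python
import math
import random

def fit_line(x, y):
    """Simple OLS fit; returns intercept, slope, residual SD, mean of x, Sxx."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    slope = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sxx
    intercept = my - slope * mx
    rss = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    return intercept, slope, math.sqrt(rss / (n - 2)), mx, sxx

def share_inside(x, y, prediction, crit=1.96):
    """Share of observed points inside a pointwise 95% band.

    prediction=False: band for the fitted mean (uncertainty in the line only).
    prediction=True: also adds the residual variance, as a forecast interval does.
    """
    intercept, slope, s, mx, sxx = fit_line(x, y)
    n = len(x)
    inside = 0
    for xi, yi in zip(x, y):
        var = 1.0 / n + (xi - mx) ** 2 / sxx
        if prediction:
            var += 1.0  # extra s^2 term for a single new observation
        if abs(yi - (intercept + slope * xi)) <= crit * s * math.sqrt(var):
            inside += 1
    return inside / n

# Hypothetical data: a true line plus noise, with a large sample.
rng = random.Random(42)
x = [rng.uniform(0, 10) for _ in range(2000)]
y = [1.0 + 0.5 * xi + rng.gauss(0, 2) for xi in x]
print(share_inside(x, y, prediction=False), share_inside(x, y, prediction=True))
```

With a large sample, only a small share of points sits inside the band for the mean, while close to 95% sit inside the prediction band.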

Frances, what do you think about having students fill out the forms online, at their own convenience, outside of class time? This is what occurs at my university, and I’m not sure whether it alleviates some of these selection biases or makes them worse.

Evan – I think the way to answer that question is to look at the number of students filling in the on-line form. I would think the on-line responses might be slightly closer to the RMP responses, in that the students who fill in the form would be those who felt strongly enough about the course. On the other hand, the on-line form might be more likely to be completed by conscientious, diligent students, which might bump up all profs’ average evaluations.

It would be interesting to look at the experience of universities that have switched from paper to on-line evaluations, and see how/if the typical prof’s evaluation has changed.

My university went through a transition from in-class paper evaluations to out-of-class online evaluations when I was a TA. I found that for the same courses and same material, the response rate fell and my total evaluation scores also fell.

My own explanation was that the in-class evaluations were biased upward for classes with no attendance policy, since students who did not like the class or professor were unlikely to attend. For the online evaluations, though, students who greatly dislike a class see it as an opportunity to vent their frustration, and so are actually more likely than the median student to take the time.

The admin at my university wants to implement a system where students would have to fill out an online evaluation to get their grades. Personally, I think it is a bad idea for a number of reasons. One is the same reason I think mandatory voting is a bad idea. Just as mandatory voting makes it more difficult for people unfamiliar with the issues to simply abstain, mandatory course evaluations mean that those who were not in class enough to have an informed opinion will be giving one. Of course, my view is partly due to my belief that most student absences have little to do with factors under the instructor’s control. If anyone knows of any research relating attendance to an instructor’s teaching, I would be interested.

Hi Frances, Nick and those interested in the shaded area around the regression line:

I am not familiar with Stata (I use R, SAS, and JMP for statistics), so I don’t know how that particular grey band was generated. However, it is important to note that a confidence INTERVAL measures the uncertainty of the estimate of one mean, while a confidence BAND measures the uncertainty of multiple estimated means. A 95% confidence interval has a 95% coverage probability for one point, but a 95% confidence band has a 95% SIMULTANEOUS coverage probability for all estimated points. Thus, for each individual point, a confidence band has a coverage probability greater than 95%, and a confidence band is wider than a confidence interval at each individual point. It is also good to note that a prediction interval is wider than a confidence interval, and a prediction band is wider than a confidence band.

I encourage you to look on Google for the notes by Yang Feng from Columbia University called “Simultaneous Inferences and Other Topics in Regression Analysis”, which uses an example from “Applied Linear Statistical Models” by Kutner et al. (4th edition). I used the 5th edition of this textbook for my first linear regression course, and I found its explanation of a confidence band on pages 61-63 helpful. Yang Feng replicates and summarizes this information pretty well in those notes. The Toluca company example can be found in both the 4th and 5th editions.
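One standard way to turn the pointwise interval into a simultaneous band for a simple linear regression is the Working-Hotelling band, which to my knowledge is the construction the Kutner et al. textbook uses. It keeps the same shape as the pointwise interval but replaces the t multiplier with W = sqrt(2*F(1-alpha; 2, n-2)). This minimal Python sketch computes W using a closed-form F quantile that holds only when the numerator degrees of freedom equal 2; the 20 degrees of freedom (22 professors) are borrowed from the post purely for illustration.

```python
import math

def working_hotelling_w(alpha, df):
    """Working-Hotelling multiplier W = sqrt(2 * F(1 - alpha; 2, df)).

    For an F distribution with 2 numerator degrees of freedom,
    P(F > f) = (1 + 2 * f / df) ** (-df / 2), so the upper-alpha quantile
    has the closed form f = (df / 2) * (alpha ** (-2 / df) - 1).
    """
    f = (df / 2) * (alpha ** (-2 / df) - 1)
    return math.sqrt(2 * f)

# With 20 residual df, the pointwise 95% multiplier is t = 2.086 (from tables);
# the simultaneous 95% band uses the larger W in the same half-width formula.
print(round(working_hotelling_w(0.05, 20), 3))

# As df grows, W approaches sqrt(-2 * ln(alpha)), the chi-square(2) limit.
print(round(working_hotelling_w(0.05, 10**6), 3), round(math.sqrt(-2 * math.log(0.05)), 3))
```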

In general, I think that it is always a good idea to show a confidence band for any estimated function, especially if there are multiple functions and a comparison is being made about them. Two regression lines may appear to be different, but, if their confidence bands overlap, then their differences may not be statistically significant.

I aim to address confidence bands in a future post on my blog to continue my recent series on linear regression.

Read the following paper for a more detailed explanation.

Liu, Wei, Shan Lin, and Walter W. Piegorsch. “Construction of exact simultaneous confidence bands for a simple linear regression model.” International Statistical Review 76.1 (2008): 39-57.